EmoLand

A game-based system supporting children's emotional development

Introduction

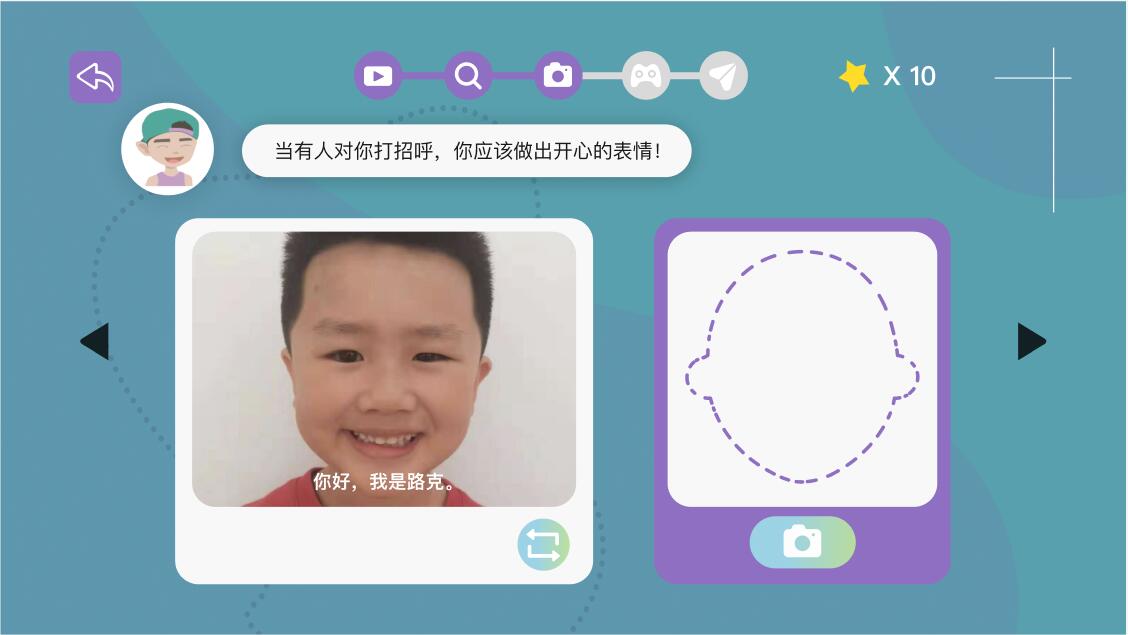

- Children’s emotional skills are crucial for their success. However, some children, such as those with autism spectrum disorder (ASD), struggle to understand social contexts and to recognize and produce facial expressions. We present the design of EmoStory, a game-based interactive narrative system that supports children’s emotional development. The system uses animation and emotional sounds to teach children six basic emotions and their facial expressions within various social contexts. It also provides multi-level games for systematic practice of the learned skills. By incorporating facial expression recognition techniques and animated visual cues for key facial movement features, the system helps children practice facial expressions and offers explicit guidance during tasks.

- Based on several pilot studies, we revised EmoStory and updated it as EmoLand. EmoLand consists of two main parts: EmoStory (the previous version) and EmoGame (the newly added component). EmoGame is a collection of smaller game-based activities that allow children to practice specific skills based on their personalized needs. For instance, teachers can select a particular type of practice and specify the number of trials for the child to engage in. These activities are designed to reinforce the lessons taught in EmoStory while also allowing for more flexible and personalized usage.

- Our system encompasses four main design features:

- Animation and narratives: We employ narratives that incorporate visual and auditory content to help children with autism understand the connection between social contexts and emotions. Narratives play a central role in constructing social meanings and are frequently employed in interventions for children with autism.

- Multi-level games: We design games that gradually increase in difficulty to provide structured learning tasks. These games are integrated into the narrative to encourage children to reflect on their acquired knowledge and put it into practice.

- Real-time feedback based on facial expression recognition: We help children practice facial expressions in various simulated contexts by monitoring their facial expressions as they play. The system captures camera frames at 24 frames per second and passes them to the facial expression recognition module. This module is built on a Convolutional Neural Network trained on the Child Affective Facial Expression Set, which contains about 1,200 photographs of over 100 children (ages 2-8) exhibiting our target expressions.

- Models of design and learning discrepancy: We provide different learning tasks, such as facial expression matching tasks and facial expression production tasks. These tasks can be used independently for trial practice in EmoGame or combined as structured, sequential activities in EmoStory. Learning discrepancy refers to tutors’ ability to control the number of trials and the difficulty of the tasks to suit each child’s abilities, which gives tutors greater flexibility in managing the training process.
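
The tutor-controlled practice described above can be illustrated with a minimal sketch. Everything named here is a hypothetical illustration, not the EmoLand implementation: `PracticeConfig`, `trial_passed`, `run_block`, the `hold_seconds` difficulty knob, and the list of six emotion labels are all assumptions. Only the 24 frames-per-second rate comes from the description. The per-frame labels are assumed to come from the recognition module, which is not modeled.

```python
from dataclasses import dataclass
from typing import Iterable, List

FPS = 24  # frame rate stated in the system description

# Assumed label set (the commonly cited six basic emotions); the page does not list them.
EMOTIONS = ("happiness", "sadness", "anger", "fear", "surprise", "disgust")


@dataclass
class PracticeConfig:
    """Hypothetical tutor-facing settings for one practice block."""
    target: str          # emotion the child is asked to express
    trials: int          # number of trials chosen by the tutor
    hold_seconds: float  # how long the expression must be held (assumed difficulty knob)

    def __post_init__(self) -> None:
        if self.target not in EMOTIONS:
            raise ValueError(f"unknown emotion: {self.target}")
        if self.trials < 1:
            raise ValueError("trials must be at least 1")


def trial_passed(frame_labels: Iterable[str], cfg: PracticeConfig) -> bool:
    """Return True once the target label appears in enough consecutive frames."""
    needed = max(1, round(cfg.hold_seconds * FPS))
    streak = 0
    for label in frame_labels:
        # Any non-target frame resets the run of matching frames.
        streak = streak + 1 if label == cfg.target else 0
        if streak >= needed:
            return True
    return False


def run_block(trials_frames: List[List[str]], cfg: PracticeConfig) -> int:
    """Count passed trials, considering at most cfg.trials of them."""
    return sum(trial_passed(frames, cfg) for frames in trials_frames[: cfg.trials])
```

In this sketch, a tutor who wants an easier task would lower `hold_seconds`, and one who wants a shorter session would lower `trials`. Requiring a run of consecutive matching frames is one simple way to keep a single misclassified frame from triggering feedback.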

Pictures & Videos

Papers and Awards

- Fan, M.*, Fan, J., Jin, S., Antle, A.N., Pasquier, P. EmoStory: A Game-based System Supporting Children’s Emotional Development. In Extended Abstracts of the Conference on Human Factors in Computing Systems (CHI EA ’18), ACM Press, Montreal, April 21-26, 2018, LBW058, 1-4.

- Fan, M.*, Tong, X., Wen, Yalcin, N., Kim, L., Wu, Z., Benton, L. Designing AI Interfaces for Children with Special Needs in Educational Contexts. In Proceedings of the 22nd Annual ACM Interaction Design and Children Conference (IDC ’23). ACM Press, New York City, USA, June 19-23, 2023, 801–803.

- Fan, M.*, Antle, A.N., Lu, Z. The Use of Short-Video Mobile Apps in Early Childhood: A Case Study of Parental Perspectives in China. Early Years, 1-15.